It’s similar but AI can learn from its mistakes. This is interesting though.

what you are describing does not at all seem like artificial intelligence to me

i mean, randomizers? that’s like, literally the opposite of ai.

like, this won’t even respond differently depending on what question you ask, it’ll just pick a random answer.

and most importantly, it won’t ever learn.

I mean, Gimkit is Turing complete, so an AI is possible. We don’t have to use this weird version.

Since it has some block code, it’s probably possible. I mean, there is some stuff that’s pretty close to AI on Scratch, which uses a different type of block code, so it should be doable here too.

Maybe it could begin with a randomizer before it starts learning from that randomizer’s mistakes.

True. But after all, this is just a concept. People can improve on this to make it actually seem like one.

yes but you still call it artificial intelligence

and this just isn’t true at all:

Yeah, that’s actually a good idea. You can add a link to this post and add your own thoughts as well.

What about randomness added onto previous decisions? Like, if you pick a five, the AI can only pick six or four.
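A minimal Python sketch of that idea, assuming picks are whole numbers in a hypothetical range of 1 to 10. Each new pick is chosen at random from the numbers one step away from the previous pick. This kind of process is usually called a random walk. The names `next_pick` and `walk` are made up for illustration. Note that each random choice now depends on the last one, but nothing is learned yet.

```python
import random

def next_pick(previous, low=1, high=10, rng=random):
    """Pick a number one step away from the previous pick (e.g. 5 -> 4 or 6)."""
    # Only the neighbours of the previous pick are allowed, kept inside [low, high].
    options = [n for n in (previous - 1, previous + 1) if low <= n <= high]
    return rng.choice(options)

def walk(start, steps, low=1, high=10, seed=None):
    """Make a sequence of picks, each one step away from the one before it."""
    rng = random.Random(seed)
    picks = [start]
    for _ in range(steps):
        picks.append(next_pick(picks[-1], low, high, rng))
    return picks

print(walk(5, 8, seed=1))
```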

AI lesson time-

An AI (here, a neural network) is a network of neurons, and each neuron decides what number to output based on its inputs. With the sigmoid function used below, that number is between 0 and 1. Each neuron’s output starts from the sum of its weighted inputs plus a bias:

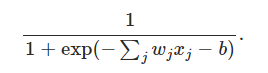

For each input a neuron has, there is also a weight. The input values are each multiplied by their weights, then added up. After this, a “bias” is also added. This can be represented in math, where x = inputs, w = weights, and b = bias, like this:


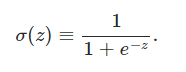

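Finally, to get the output of a neuron, the sum is fed into the sigmoid function, which squashes any number into the range 0 to 1:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

This is the full equation that represents this, where w = weights, x = inputs, and b = bias:

$$\text{output} = \sigma\left(\sum_i w_i x_i + b\right)$$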
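The weighted sum plus bias, passed through the sigmoid, fits in a few lines of Python. This is a sketch and not code from the post. The function names `sigmoid` and `neuron` and the example numbers are made up for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the range 0 to 1."""
    # Two branches so math.exp never overflows for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def neuron(inputs, weights, bias):
    """Multiply each input by its weight, add them up, add the bias, apply sigmoid."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Made-up numbers: z = 1.0*0.8 + 0.5*(-0.4) + 0.1 = 0.7
print(neuron([1.0, 0.5], [0.8, -0.4], 0.1))  # about 0.668
```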

Many of these neurons are chained together in layers, usually with an input layer, a few hidden layers, and an output layer.
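Chaining neurons into layers means each layer’s outputs become the next layer’s inputs. Here is a hedged Python sketch that assumes a tiny hypothetical network: 2 inputs, one hidden layer of 3 neurons, and 1 output neuron. The weights are hand-picked, whereas a real network would learn them. The input layer does no math here; it is just the list of input values. The names `layer`, `forward`, and `network` are made up.

```python
import math

def sigmoid(z):
    """Numerically stable sigmoid: maps any real number into the range 0 to 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def layer(inputs, weights, biases):
    """One layer: each row of weights, with its bias, is one neuron."""
    return [sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]

def forward(inputs, network):
    """Feed the inputs through every (weights, biases) layer in order."""
    activations = inputs  # the "input layer" is just the raw input values
    for weights, biases in network:
        activations = layer(activations, weights, biases)
    return activations

# Hypothetical 2-3-1 network; in a real AI these numbers come from training.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer: 3 neurons
    ([[1.0, -1.0, 0.5]], [0.2]),                                  # output layer: 1 neuron
]
print(forward([1.0, 0.0], network))
```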

I would also explain the learning process of an AI, but I don’t understand it, as it involves a lot of fun subjects like calculus. That’s how an AI works, though; the methods described in the comments and in the post aren’t effective.

For anyone who wants a bit more than a summary, here, have an article:

https://neuralnetworksanddeeplearning.com/chap1.html

Nice guide and idea! I will be implementing this idea in my UNO game (will most likely make a guide about it)!

Well, sorry that i was wrong.

If–elif–else logic will make it possible. Randomizers don’t make an AI very smart, but logic does.
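To show what rule-based logic looks like next to a randomizer, here is a hypothetical UNO card picker in Python that uses only if/elif/else. The card format, the rule order, and the name `choose_card` are assumptions for illustration. It also assumes the top card always has a real color (after a wild, the color that was called). Unlike a randomizer, it always makes the same choice for the same hand, but it never learns either.

```python
from typing import List, Optional, Tuple

# Hypothetical card format: (color, value), e.g. ("red", "7"); wilds are ("wild", "wild").
Card = Tuple[str, str]

def choose_card(hand: List[Card], top: Card) -> Optional[Card]:
    """Pick a card using fixed if/elif/else rules; None means "draw a card"."""
    top_color, top_value = top
    same_color = [card for card in hand if card[0] == top_color]
    same_value = [card for card in hand if card[1] == top_value]
    wilds = [card for card in hand if card[0] == "wild"]

    if same_color:
        return same_color[0]   # Rule 1: match the color.
    elif same_value:
        return same_value[0]   # Rule 2: match the number or symbol.
    elif wilds:
        return wilds[0]        # Rule 3: save wilds for when nothing else fits.
    else:
        return None            # Rule 4: nothing playable, so draw.


hand = [("blue", "3"), ("red", "9"), ("wild", "wild")]
print(choose_card(hand, ("red", "5")))    # ('red', '9')
print(choose_card(hand, ("green", "3")))  # ('blue', '3')
print(choose_card(hand, ("green", "5")))  # ('wild', 'wild')
```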

It is actually very rigged. That’s what it should be for, but it just gives it to random people who joined that month.

Yeah. That’s a bit complicated for gimkit, and if we want an even slightly complex ai, we’ll probably use a buncha memory.

Also, not to you but just in general: it’s not actually AI, just machine learning.

> i mean, randomizers? that’s like, literally the opposite of ai.

where is it supposed to start, if not random actions?

> it won’t ever learn

it will, though: the higher the success rate of a certain action, the higher the likelihood of it happening.

this is a simplified version of reinforcement learning, if you’ve ever seen that in action.
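Here is a minimal Python sketch of that simplified idea. It assumes each action’s chance of being picked is proportional to its success rate so far. Every action starts at 1 success out of 2 tries, an assumed starting point so that nothing begins at 0%. The class name, the actions "A", "B", "C", and their hidden odds are all made up. Strictly speaking, this is closer to a multi-armed bandit than to full reinforcement learning, since there is no game state, only a choice of action.

```python
import random

class SuccessWeightedChooser:
    """Picks actions at random, weighted by each action's success rate so far."""

    def __init__(self, actions, seed=None):
        self.rng = random.Random(seed)
        # Assumed starting point: 1 success out of 2 tries, so every action
        # begins at a 50% success rate and none starts at a 0% chance.
        self.successes = {a: 1 for a in actions}
        self.tries = {a: 2 for a in actions}

    def rate(self, action):
        return self.successes[action] / self.tries[action]

    def choose(self):
        actions = list(self.successes)
        weights = [self.rate(a) for a in actions]
        return self.rng.choices(actions, weights=weights, k=1)[0]

    def record(self, action, succeeded):
        # Every attempt counts as a try; only successes raise the rate.
        self.tries[action] += 1
        if succeeded:
            self.successes[action] += 1


# Hypothetical game: "B" secretly works 80% of the time, the others 20%.
true_odds = {"A": 0.2, "B": 0.8, "C": 0.2}
env = random.Random(42)
agent = SuccessWeightedChooser(true_odds, seed=7)
for _ in range(500):
    action = agent.choose()
    agent.record(action, env.random() < true_odds[action])
print({a: round(agent.rate(a), 2) for a in true_odds})
```

Starting out, all three actions are equally likely (pure randomizer). As results come in, "B" ends up with the highest rate and gets picked most often.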

now you call this machine learning??? that’s even worse!!!

that’s literally just what it’s called.

next time you wanna be rude, at least know what you’re talking about.

wait what, i thought this just said that it chooses one random choice and doesn’t increase the odds

nah that wouldn’t be machine learning that would be rng lmao

Reinforcement learning is basically where the system receives rewards for accomplishing a goal and punishments for going in the wrong direction. I don’t know the specifics, but I’m pretty sure this is the same, or at least it looks like it. Both systems start out random, but as they do something better, they become more likely to do it in the future. Dunno about real neural networks, but here we’d use logarithmic chance growth, since we always want to leave room for mutation to keep improving and to prevent the system from falling into a rut.
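Here is one way to read "logarithmic chance growth", sketched in Python under some assumptions. Each reward adds 1 to an action’s score, and each punishment subtracts 1 (never going below 0). An action’s weight is 1 + ln(1 + score). Because the logarithm keeps slowing down, a frequently rewarded action never takes over completely, so the other actions still get tried. The formula and the class name `LogGrowthChooser` are hypothetical and do not come from the post.

```python
import math
import random

class LogGrowthChooser:
    """Weights grow with the log of the score, so no action ever reaches 100%."""

    def __init__(self, actions, seed=None):
        self.rng = random.Random(seed)
        # Every action starts with score 0, so weight 1: all equally likely at first.
        self.score = {a: 0 for a in actions}

    def weight(self, action):
        # log1p(s) = ln(1 + s): grows fast at first, then slower and slower.
        return 1.0 + math.log1p(self.score[action])

    def probabilities(self):
        total = sum(self.weight(a) for a in self.score)
        return {a: self.weight(a) / total for a in self.score}

    def choose(self):
        probs = self.probabilities()
        actions = list(probs)
        return self.rng.choices(actions, weights=[probs[a] for a in actions], k=1)[0]

    def feedback(self, action, reward):
        # reward = +1 for success, -1 for punishment; the score is floored at 0.
        self.score[action] = max(0, self.score[action] + reward)


chooser = LogGrowthChooser(["A", "B", "C"], seed=3)
for _ in range(1000):
    chooser.feedback("B", +1)  # pretend "B" keeps getting rewarded
print({a: round(p, 3) for a, p in chooser.probabilities().items()})
# {'A': 0.101, 'B': 0.798, 'C': 0.101}
```

After 1000 rewards, "B" levels off around an 80% chance. With linear growth (weight = 1 + score), it would be at 1001/1003, about 99.8%, which leaves almost no room to try anything else.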

yeah i know but based on what the post said i thought it was just rng and they weren’t talking abt actual machine learning

ah

yeah i had no idea what they meant at first, but it’s fleshed out into a nice idea now lol